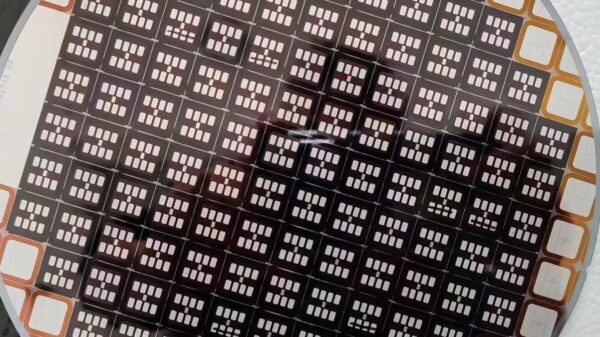

In a significant step for the future of artificial intelligence (AI) hardware, Samsung Electronics presented pioneering memory semiconductor technologies at this year's Hot Chips, a prestigious annual semiconductor conference held at Stanford University.

The symposium, held from August 27 to 29, drew major industry players including SK Hynix, Intel, AMD, and Nvidia, and served as a stage for unveiling cutting-edge technology. Samsung Electronics stood out by debuting research on two new memory types: High-Bandwidth Memory Processing-In-Memory (HBM-PIM) and Low Power Double. . .